Antlr4 is a new iteration of a popular Antlr parse tree generator. Antlr4 features great documentation and an in-depth book on the subject. However, the topic of autocompletion lacks any substantive material. I hope this article will steer you in the right direction if you are looking to implement autocomplete functionality using Antlr4.

What’s Autocomplete?

Assume the following grammar:

// Parser

query: Count Fruits EOF;

// Lexer

Count: [Cc] [Oo] [Uu] [Nn] [Tt];

Fruits: "[0-9a-zA-Z]";

Ws: [\s] -> skip;

This grammar would produce a parser that would match the word “count” followed by any alphanumeric string in quotes, both strings are case insensitive, and any number of spaces between the two words are acceptable. For examples: count “apples”

Now, assume we want to present our users with a GUI input field, and then do two things. First, we want to make sure that whatever they type in is a valid input according to the grammar defined above. And second, as the user is typing we want to show suggestions about what can be typed next.

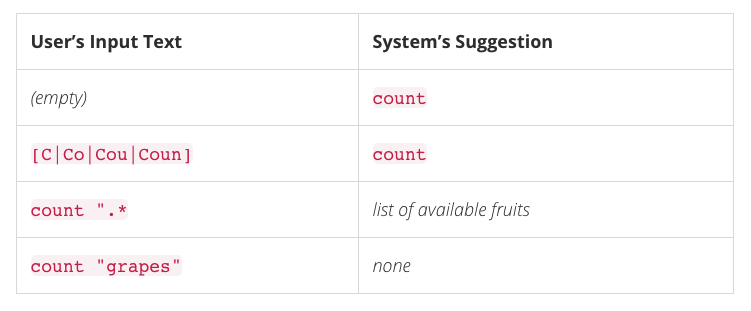

Let me explain the second point a bit more with a table of some permutations:

From the table above, we can see that autocomplete tries to suggest possible input based on the current parser and lexer token (more about this a bit later). Note, the list of fruits for the Fruits token would probably come from some sort of a storage, in-memory, backend, whatever it might be. While the Count token can be suggested as is.

Below we will focus on Fruits Rule and how to create an autocomplete for it.

Autocomplete & Errors – Two Good Friends

An important point to understand is that autocompletion assumes an invalid user input according to the defined grammar. That is, if we try to validate a partial (incomplete) user’s input, the parser will throw an error. If an error was thrown, we should be able to figure out where parser stopped, what rule or token was expected when the execution stopped and what text the parser was unable to match according our grammar.

So, errors you say, right? How about we hook into these errors, take a look around and see if we can create autocomplete functionality based on the errors the parser produces.

A side note. Code samples below are written in Javascript. Antlr4 has a Javascript target that will convert your grammar into Javascript code. The language choice is also dictated by the task – autocompletion is primarily a frontend job, and Javascript is a frontend language. Besides, it’s really cool to be able to validate grammar right in the browser, avoiding the server side altogether.

Another Side note. The examples below should easily translate to the language of your choice if there is a need.

Let’s begin by creating an error listener:

var ErrorListener = require('antlr4/error/ErrorListener').ErrorListener;

function TestGrammarErrorListener() {

ErrorListener.call(this);

this.partialFruit = null;

this.errors = [];

return this;

}

TestGrammarErrorListener.prototype = Object.create(ErrorListener.prototype);

TestGrammarErrorListener.prototype.constructor = TestGrammarErrorListener;

Here, we import the standard Antlr4 Error listener, and subclass it with our own TestGrammarErrorListener. Important points to notice:

partialFruit property – will hold the string that the parser failed on

errors array – will hold a list of errors that the ErrorListener caught.

I will not explain how to attach the error listener to your parser or tree walker. There are plenty of examples of that.

Now, that we have the error listener created and attached to our parser, we need to do one important change – override the actual error handling method.

TestGrammarErrorListener.prototype.syntaxError = function(recognizer, offendingSymbol, line, column, msg, e) {

this.errors.push(arguments);

this.partialFruit = null;

var typeAssistTokens = ["Fruits"];

var parser = recognizer._ctx.parser,

tokens = parser.getTokenStream().tokens;

// last token is always "fake" EOF token

if (tokens.length > 1) {

var lastToken = tokens[tokens.length - 2],

tokenType = parser.symbolicNames[lastToken.type];

this.tokenType = tokenType;

if (typeAssistTokens.indexOf(tokenType) >= 0) {

this.partialFruit = lastToken.text;

}

}

};

Here we override syntaxError method, that is called every time (should be at most once per parse job) the parser encounters a mismatch in input according to the grammar. Let me explain the code a bit more.

typeAssistTokens is a list of tokens which will activate our type assist. See the grammar (tokens are the left side of the rule definitions – everything before a colon).

Then, we ask the parser to give us a list of tokens that were parsed. We take the last token – that’s the token where the parser failed and stopped. Then we need to check the type of the last token. If it’s of type we’re interested in (Fruits in this case), then we extract the text from that token and that’s the text we can use for type assist. That text is assigned to partialFruit property.

So, if partialFruit is not null, then we need to active type assist. Fire off some ajax requests, do some wildcard queries to the database, or something of this nature. Additionally, you can use tokenType property to differentiate between different type assist sources, when your grammar gets more complex.

Help! Nothing works!

There are a few interesting quirks I encountered along the way. Check them out before giving up on parsers and taking out your regular expression hack tool.

My ErrorListener Doesn’t Consistently Trigger

I am glad you asked. Take a look at the grammar above once more. See the EOF token? That’s a special magical token to tell the lexer to parse everything, and I mean this time for real, everything, no cheating. Lexer’s behaviour is somewhat odd. It tries so hard to find a token in user’s input that sometimes it goes too far and leaves out some trailing input as long as everything before it matched. I didn’t dig any deeper for a better explanation. If you have it, please let me know. However, adding EOF to the end of your grammar should do the trick, and probably break some other things along the way 🙂

I Get a Wrong Token in Error Listener

So, your syntaxError method is triggered, but the token you get there is not the token you’re expecting. Say, you expected the Fruits token, but you got the Count token instead.

The problem lies in the Lexer again. The Lexer will try to match any of the rules against the input it has, without any knowledge of precedence of these rules – that’s Parser’s job. So, if you have a very liberal token, say: SomeRule: (\w+); the Lexer will match some of your input according to that rule, but the parser will fail at that, because that’s not the rule it expected.

How do you solve the problem? Well, avoid very liberal rules if you can. If you cannot, as it’s probably the case, then hide liberal rules in lexing modes.

Lexing modes, essentially split your lexing rules into isolated namespaces or sublexers. Anything inside a sublexer is not visible to the main lexer. You can activate sublexers based on some trigger conditions. For instance, in our Fruits rule above, we can active the sublexer once an opening quote is encountered, and exit the sublexer, once the closing quote is encountered. And then we can move out the Fruits regular expression into the sublexer, thus avoiding a liberal lexing rule in the global lexing space.

This trick will ensure that you get the right tokens in your syntaxError method.

Conclusion

Autocomplete functionality with Antlr4 turned out to be quite easy to implement and is very scalable in terms of differentiating between different autocomplete sources based directly on grammar rules. The entire autocomplete logic resides right in the browser which provides you with flexibility to trigger autocomplete as you see fit. On the other hand, Antlr4 ships other language targets, meaning, that if there is ever a need, autocomplete functionality can easily be moved to the server side. This can prove to be useful, should your grammar grow in size and complexity and the frontend is not performant enough to cater for those needs, or if you are willing to keep your parser completely closed source.