Submitting a bug report takes time and energy, so users need a strong motivation to do it. For developers, that means it may take longer to be notified of a bug. Not everyone is a power user who will report odd behavior, especially when it is not mission critical.

Here at Komand, we came up with a neat little solution to make reporting bugs easier for our users. To do this, we must take some of the work out of the reports. Tasks such as bug notifications (often via e-mail), retrieval of system information, and ticketing come to mind as processes that can be automated to achieve our goal.

Luckily, we can spin up a few services to help orchestrate our plan. We will rely on the AWS services S3 and Lambda, as well as some simple scripts we wrote to collect and upload our information.

The general workflow becomes:

- The user submits a bug report via the user interface

- A script begins archiving related system and service information

- The script encrypts the archive

- The archive is uploaded to S3

- Lambda receives an upload event from S3

- Lambda executes code to create a ticket and attaches the archive

- Our team handles the ticket and investigates

S3:

S3 can be used as a reasonably low-cost solution to store files such as bug reports. The idea here is that when users click a button or run a command to generate a bug report, the relevant information is uploaded to the bucket. We have a few requirements, though. For starters, not everyone should be able to write to the bucket, since that would open us up to abuse and possibly a costly bill. In addition, not everyone should be able to download bug reports. The reports contain information about customer systems and should be treated as confidential.

We can employ a universal way to authenticate our users to the bucket. Unfortunately, S3 authentication is not very robust for this use case, so we have to rely on the "what you know" factor carried in HTTP headers. It's not foolproof, but it's a risk that we accept. At a high level, the policy requires the following:

- HTTPS for all bucket requests

- A token as the User Agent for authentication

- A specific referrer as a second level of authentication

- Logging of all transactions

The bug reporting code shipped with the product must keep this information safe but available because it will be used to authenticate users for uploads.

We use different tokens for access control. The first token is used for uploading (s3:PutObject) via HTTP PUT requests. The second token lets our team retrieve files (s3:GetObject) via HTTP GET requests and manage the files in the bucket (s3:DeleteObject) via HTTP DELETE requests. Each token can only be used to perform its respective tasks.
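As a rough illustration of the upload side, the sketch below builds the kind of HTTPS PUT request the bug reporting code would send, with the token in the User-Agent header and the expected referrer in the Referer header. The bucket URL, token, referrer, and function name are all placeholders we made up for the example, not the values or code shipped with the product.

```python
import urllib.request

# Hypothetical placeholders: the real bucket URL, token, and referrer
# are deployment-specific secrets.
BUCKET_URL = "https://bug-reports-example.s3.amazonaws.com"
UPLOAD_TOKEN = "token1"
REFERER = "http://referrer"


def build_upload_request(filename, payload):
    """Build (but do not send) an HTTPS PUT request carrying the upload token."""
    return urllib.request.Request(
        url=f"{BUCKET_URL}/{filename}",
        data=payload,
        method="PUT",
        headers={"User-Agent": UPLOAD_TOKEN, "Referer": REFERER},
    )


request = build_upload_request("report.tar.gz.gpg", b"example bytes")
# Sending it would be: urllib.request.urlopen(request)
```

Keeping the request construction separate from the network call makes it easy to check the headers in a unit test without touching the bucket.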

Terraform, another great HashiCorp product, can be used to build out the bucket and its policies in a reproducible manner. It's very useful as an infrastructure-as-code tool. The following example illustrates how to build two S3 buckets: one for our bug reports, and the other for storing the logs of our bug report bucket.

    provider "aws" {
      access_key = "${var.aws_access_key}"
      secret_key = "${var.aws_secret_key}"
      region     = "${var.region}"
    }

    # Bucket that receives the access logs of the report bucket
    resource "aws_s3_bucket" "log_bucket" {
      # Bucket names may not contain underscores, so use a hyphen
      bucket        = "${var.bucket_name}-logs"
      acl           = "log-delivery-write"
      force_destroy = true

      lifecycle_rule {
        id      = "log_lifecycle"
        # Must match target_prefix below, or the logs never expire
        prefix  = "log/"
        enabled = true

        expiration {
          days = 90
        }
      }
    }

    # Bucket that stores the uploaded bug reports
    resource "aws_s3_bucket" "report_bucket" {
      bucket = "${var.bucket_name}"
      acl    = "private"
      policy = "${file("report_policy.json")}"

      tags {
        Name        = "Bug Reports"
        Environment = "Production"
      }

      logging {
        target_bucket = "${aws_s3_bucket.log_bucket.id}"
        target_prefix = "log/"
      }

      force_destroy = true

      lifecycle_rule {
        id      = "reports_lifecycle"
        # An empty prefix applies the rule to every object in the bucket
        prefix  = ""
        enabled = true

        expiration {
          days = 30
        }
      }
    }

We referenced a JSON policy file in the configuration above. In that file we define the aforementioned access control rules. The policy goes so far as to disallow management of the bucket from the S3 web interface. Instead, we wrote a command-line tool that authenticates using token2 so we can manage the bucket more efficiently, mainly by quickly cleaning out old reports.

The configuration may look a bit convoluted, and the interplay between the Allow and Deny statements is everything: an explicit Deny always overrides an Allow. It took a bit of tinkering to get it just right for our purposes.

    {
      "Version": "2012-10-17",
      "Id": "bug_report policy",
      "Statement": [
        {
          "Sid": "Allow specific upload from anyone with correct setup",
          "Effect": "Allow",
          "Principal": "*",
          "Action": "s3:PutObject",
          "Resource": "arn:aws:s3:::bug_reports/*",
          "Condition": {
            "StringEquals": {
              "aws:UserAgent": "token1"
            },
            "StringLike": {
              "aws:Referer": "http://referrer"
            },
            "Bool": {
              "aws:SecureTransport": "true"
            }
          }
        },
        {
          "Sid": "Deny all other requests not meeting conditions",
          "Effect": "Deny",
          "Principal": "*",
          "NotAction": "s3:PutObject",
          "Resource": "arn:aws:s3:::bug_reports/*",
          "Condition": {
            "StringNotLike": {
              "aws:Referer": "http://referrer"
            },
            "StringNotEquals": {
              "aws:UserAgent": [
                "token1",
                "token2"
              ]
            }
          }
        },
        {
          "Sid": "Allow basic management",
          "Effect": "Allow",
          "Principal": "*",
          "Action": [
            "s3:GetObject",
            "s3:DeleteObject"
          ],
          "Resource": "arn:aws:s3:::bug_reports/*",
          "Condition": {
            "StringEquals": {
              "aws:UserAgent": "token2"
            },
            "Bool": {
              "aws:SecureTransport": "true"
            }
          }
        },
        {
          "Sid": "Deny all but basic management",
          "Effect": "Deny",
          "Principal": "*",
          "NotAction": "s3:DeleteObject",
          "Resource": "arn:aws:s3:::bug_reports/*",
          "Condition": {
            "StringNotEquals": {
              "aws:UserAgent": [
                "token1",
                "token2"
              ]
            }
          }
        },
        {
          "Sid": "Deny GET request from bug report user agent",
          "Effect": "Deny",
          "Principal": "*",
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::bug_reports/*",
          "Condition": {
            "StringEquals": {
              "aws:UserAgent": "token1"
            }
          }
        },
        {
          "Sid": "Deny GET request via http",
          "Effect": "Deny",
          "Principal": "*",
          "Action": "s3:GetObject",
          "Resource": "arn:aws:s3:::bug_reports/*",
          "Condition": {
            "Bool": {
              "aws:SecureTransport": "false"
            }
          }
        }
      ]
    }

We suggest writing functional tests to verify that all authentication combinations work as expected.
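One way to organize such tests is to enumerate every combination of method, User-Agent, referrer, and transport, together with the outcome the rules are meant to produce. The sketch below models only the intent described in this post (it does not evaluate the actual bucket policy), and the token values, the "curl/8.0" stand-in for an unknown client, and the function names are assumptions for illustration. A real functional test would send each case to the bucket and compare the response with the expected outcome.

```python
from itertools import product

UPLOAD_TOKEN = "token1"      # placeholder upload token
MANAGE_TOKEN = "token2"      # placeholder management token
REFERER = "http://referrer"  # placeholder referrer


def should_allow(method, user_agent, referer, https):
    """Return True if the rules described in this post intend to allow the request."""
    if not https:
        return False
    if method == "PUT":
        return user_agent == UPLOAD_TOKEN and referer == REFERER
    if method in ("GET", "DELETE"):
        return user_agent == MANAGE_TOKEN
    return False


def build_cases():
    """Enumerate every combination along with its intended outcome."""
    methods = ("PUT", "GET", "DELETE")
    agents = (UPLOAD_TOKEN, MANAGE_TOKEN, "curl/8.0")
    referers = (REFERER, "")
    transports = (True, False)  # True means HTTPS
    return [
        (m, a, r, t, should_allow(m, a, r, t))
        for m, a, r, t in product(methods, agents, referers, transports)
    ]


for case in build_cases():
    print(case)
```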

The S3 logs can also be sent to a log analysis program such as Sagan or OSSEC. Should the tokens become compromised, we can write rules to detect the abuse and alert us. Once it is detected, we can act on it, for example by denying the attacker's IP address in an automated fashion and rotating the tokens.
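Sagan and OSSEC express this kind of detection as rules. As a plain Python stand-in for the idea, the sketch below flags source addresses with many denied requests. It assumes the access log lines have already been parsed into (source IP, HTTP status) pairs, and the threshold and function name are arbitrary choices for the example.

```python
from collections import Counter


def flag_abusive_ips(records, threshold=10):
    """Return source IPs with at least `threshold` denied (403) requests.

    `records` is an iterable of (source_ip, http_status) pairs, assumed to
    have been extracted from the bucket's access logs beforehand.
    """
    denied = Counter(ip for ip, status in records if status == 403)
    return sorted(ip for ip, count in denied.items() if count >= threshold)
```

The returned addresses could then be fed to whatever mechanism blocks offenders, and a sudden spike would be a hint to rotate the tokens.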

Lambda:

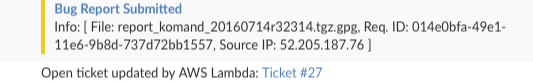

The Lambda service provided by Amazon makes it easy to execute code when an AWS event occurs in a supported AWS service. S3 uploads can generate events, which can be used to perform tasks such as getting the path of the file in S3 and making API calls to a third-party service. The s3:PutObject action occurs when there's an HTTP PUT request. We use this event to fire off code that creates a ticket using our ticketing system's API and attaches the archive file to the ticket. Note that Lambda has temporary storage mounted at /tmp in your code's execution environment. We can retrieve the bug report archive from S3 and place it in temporary storage to perform our next set of actions.

The following Python snippet is executed by Lambda once uploaded and configured. Lambda passes two objects, one named event and the other context, to our program's handler function (main here). These are used to obtain runtime information such as the event that caused Lambda to execute the code.

    # The helper functions (getfile, uploadfile, createticket, attachfile,
    # rmfile) are defined elsewhere in the module and are not shown here.
    def main(event, context):
        # Description
        desc = 'A bug report has been submitted to S3.'
        # Bucket name
        bucket = event['Records'][0]['s3']['bucket']['name']
        # File name (the object key in S3)
        f = event['Records'][0]['s3']['object']['key']
        # Source IP of request
        s = event['Records'][0]['requestParameters']['sourceIPAddress']
        # Request ID of this Lambda invocation
        c = context.aws_request_id
        # Put it together
        description = 'Info: [ File: %s, Req. ID: %s, Source IP: %s ]' % (f, c, s)

        getfile(bucket, f)               # Download file to Lambda temp storage from S3
        ftoken = uploadfile(f)           # Upload file to ticketing system and return file token
        tid = createticket(description)  # Create ticket and return ticket id
        attachfile(tid, ftoken)          # Attach file to ticket
        rmfile(f)                        # Remove file from Lambda storage

    # Lambda imports this module and calls main(event, context) itself as the
    # configured handler, so no __main__ block is needed (event and context
    # would be undefined if the file were run as a script).
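To make the shape of the event easier to see, here is a small sketch that pulls the same three fields out of a hand-written sample event. The sample values and the parse_s3_event helper are hypothetical and only mirror the fields used above. One detail worth noting is that S3 URL-encodes object keys in event notifications, so the sketch decodes the key before using it.

```python
from urllib.parse import unquote_plus


def parse_s3_event(event):
    """Extract the bucket, decoded object key, and source IP from an S3 event."""
    record = event["Records"][0]
    return {
        "bucket": record["s3"]["bucket"]["name"],
        # Keys arrive URL-encoded, e.g. spaces are sent as "+"
        "key": unquote_plus(record["s3"]["object"]["key"]),
        "source_ip": record["requestParameters"]["sourceIPAddress"],
    }


# Hand-written sample containing only the fields used here
SAMPLE_EVENT = {
    "Records": [
        {
            "requestParameters": {"sourceIPAddress": "203.0.113.10"},
            "s3": {
                "bucket": {"name": "bug-reports-example"},
                "object": {"key": "bug+report+1.tar.gz.gpg"},
            },
        }
    ]
}

print(parse_s3_event(SAMPLE_EVENT))
```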

Bug Report:

This final part is the most important piece. For a good bug report we need to make reasonable assumptions about what is useful. Gather as much information as you can to help determine the problem:

- Product logs

- System logs

- System information

  - Operating system

  - Kernel

  - Hardware

- Problem description

- Contact information

The first three points can be automated, but the last two require user interaction. We suggest that when the user initiates a bug report submission via a click or a command, they be prompted for the missing details that will tie everything together.
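The automated part can be sketched with nothing but the Python standard library. The example below is a minimal illustration, not the script we ship: the function names, the archive layout, and the report.json summary file are assumptions made for the example. It gathers basic system information, then bundles it with the log files and the user-supplied details into a compressed archive.

```python
import json
import platform
import tarfile
import tempfile
from pathlib import Path


def collect_system_info():
    """Gather the operating system, kernel release, and hardware type."""
    return {
        "operating_system": platform.system(),
        "kernel": platform.release(),
        "hardware": platform.machine(),
    }


def build_archive(archive_path, log_paths, description, contact):
    """Write the logs plus a JSON summary into a gzip-compressed tar archive."""
    summary = {
        "system": collect_system_info(),
        "description": description,
        "contact": contact,
    }
    with tempfile.TemporaryDirectory() as tmp:
        summary_file = Path(tmp) / "report.json"
        summary_file.write_text(json.dumps(summary, indent=2))
        with tarfile.open(archive_path, "w:gz") as tar:
            tar.add(summary_file, arcname="report.json")
            for path in log_paths:
                # Skip logs that do not exist on this system
                if Path(path).is_file():
                    tar.add(path, arcname=f"logs/{Path(path).name}")
    return archive_path
```

Skipping missing log files keeps the reporter from failing on systems where a given log was never created.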

The bug report archive should also be encrypted as another layer of defense in case of unauthorized access to the S3 bucket. This way the information is unusable to the attacker and the data is still protected. GPG is a good choice for handling this in the bug reporting code.
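A minimal sketch of that step, assuming the gpg binary is installed and the team's public key has already been imported and trusted on the user's system, is to shell out to gpg with the archive and a recipient. The function names and the ".gpg" output naming are our own choices for the example.

```python
import subprocess


def gpg_encrypt_command(archive_path, recipient):
    """Return the gpg command line that encrypts archive_path for recipient."""
    return [
        "gpg", "--batch", "--yes",
        "--recipient", recipient,
        "--output", f"{archive_path}.gpg",
        "--encrypt", archive_path,
    ]


def encrypt_archive(archive_path, recipient):
    """Run gpg and return the path of the encrypted file.

    Raises subprocess.CalledProcessError if gpg exits with an error.
    """
    subprocess.run(gpg_encrypt_command(archive_path, recipient), check=True)
    return f"{archive_path}.gpg"
```

Because only the public key lives on the customer's machine, a leaked archive can be read only by whoever holds the matching private key.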

    Bug Reporter
    Enter your e-mail address? [email protected]
    Once more to verify. Enter your e-mail address? [email protected]
    Enter a description of the problem (Press Enter and ^D to finish):
    There seems to be an issue in the UI when clicking on the Workflow button. Clicking once is not enough to execute the job but two clicks is. Please investigate this and thanks for this wonderful reporting feature!
    ^D
    -> Executing logging of user input
    -> Executing archival of logs
    -> Executing encryption of logs
    -> Executing upload
    Submission complete

And voila, our team is notified.

Conclusion:

We can now save our users time and get feedback more quickly, so we're better able to help those who depend on us. Hopefully, you found this post valuable and can build upon and improve the idea.