Have you ever come into the office on a Monday and been caught completely off guard when your boss asked about a new public-facing zero-day that was released over the weekend? How would they react if you had no idea what they were talking about? Now, how would they react if you already knew about the new vulnerabilities, knew which assets were affected, and had already started the remediation process?

In this blog post, we will walk through an external scanning strategy to implement with your InsightVM deployment so you can answer that question with confidence.

External scanning (also called public scanning or attack surface scanning) means scanning your external- or public-facing assets from a scan engine outside your network, so you see them from the hacker point of view. These systems are especially at risk because they are exposed directly to internet-based attackers from around the world. For this reason, vulnerabilities on external assets need to be prioritized for immediate remediation, especially when new critical vulnerabilities show up.

This is a five-part workflow: setting up an external scanning engine, building a dynamic site with Attack Surface Monitoring with Project Sonar, alerting, automated actions, and vulnerability reporting/remediation.

Part 1: Set up an external scanning engine

There are a few different ways to get an engine that can scan your external attack surface from the hacker point of view. The easiest option is the Rapid7 shared hosted engine, which only takes a call to your CSM. You could also set up a scan engine in a remote data center or with a hosting provider. Just note that your scans will send traffic that looks like attacker activity, so clear it with the local ISP before scanning, or you could get blocked.

I’ve also seen some customers use internal scan engines and add static routes on all of the routers so that traffic from the engine’s IP going to the external IP range leaves through the external routing interface. In effect, you have to bypass your normal internal routing. While this can work, I’ve found that most networking teams refuse to set it up this way. Without those routes, if you scan external IPs from an internal engine, the traffic will most likely take the internal path to the external interface, which will not give you the hacker point of view on the asset.
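To make the routing idea concrete, here is a minimal Python model of the decision those routes encode. It is an illustration, not router configuration, and the engine IP and public range below are hypothetical placeholders. The key point is that the special route must match on both the source (the engine) and the destination (your external range), while all other traffic keeps its normal internal path.

```python
import ipaddress

# Hypothetical addresses for illustration only.
ENGINE_IP = ipaddress.ip_address("10.0.50.25")           # internal scan engine (private IP)
EXTERNAL_RANGE = ipaddress.ip_network("203.0.113.0/24")  # your public IP block (RFC 5737 example range)


def egress_interface(src: str, dst: str) -> str:
    """Return which router interface a packet would leave through.

    The special route matches on BOTH source and destination: traffic from
    the engine to the external range is forced out the external interface.
    Everything else follows normal internal routing.
    """
    source = ipaddress.ip_address(src)
    destination = ipaddress.ip_address(dst)
    if source == ENGINE_IP and destination in EXTERNAL_RANGE:
        return "external"  # leaves the network and comes back in: the hacker point of view
    return "internal"      # default internal path: NOT the hacker point of view


print(egress_interface("10.0.50.25", "203.0.113.10"))  # external
print(egress_interface("10.0.50.99", "203.0.113.10"))  # internal
```

This is also why the approach is fragile. Every router on the path needs the same source-and-destination rule, or the scan silently falls back to the internal path.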

In the end, you just need a scan engine that is connected to the InsightVM console and can reach your external attack surface.

Part 2: Set up a Sonar query and create a dynamic site

Project Sonar is a Rapid7 project and InsightVM integration point that brings in assets exposed on the public internet. Rapid7 scans the entire public internet weekly, collecting metadata from those scans and correlating it with global DNS servers. The result is a database that Rapid7 maintains in AWS, with IP address, hostname, and domain columns, which every licensed InsightVM console can query as a dynamic discovery connection.
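As a mental model of what a Sonar query does, the sketch below treats the Sonar data as rows with the three columns described above and filters them by domain. This is only an illustration: the `SonarRecord` type and `query_by_domain` function are hypothetical, and the actual query filters are configured in the InsightVM console, not in code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SonarRecord:
    """One row of Sonar data: an IP address, a hostname, and a domain."""
    ip: str
    hostname: str
    domain: str


def query_by_domain(records, domain_contains):
    """Return records whose domain contains the given text (case-insensitive).

    Hypothetical stand-in for a domain-based Sonar query filter.
    """
    needle = domain_contains.lower()
    return [r for r in records if needle in r.domain.lower()]


# Example data using reserved documentation IPs and domains.
records = [
    SonarRecord("203.0.113.10", "www.example.com", "example.com"),
    SonarRecord("203.0.113.11", "mail.example.com", "example.com"),
    SonarRecord("198.51.100.7", "shop.example.org", "example.org"),
]
for r in query_by_domain(records, "example.com"):
    print(r.ip, r.hostname)
```

Reviewing results like these is the "verify the assets are what you expected" step. If a query pulls in hosts you don't own, tighten it before building the site.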

Once you have a few queries set up and have verified that the returned assets are roughly what you expected, it's time to bring that “asset scope” into a “dynamic site.” Most sites are static, meaning they are scoped by fixed IP ranges or addresses. A dynamic site is instead scoped by a “dynamic connection” like Sonar, so its scope updates automatically whenever changes in the Sonar database change what your queries return.
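Conceptually, a dynamic site keeps its scope in sync by comparing what the queries return now with what they returned before. The minimal sketch below shows that comparison on two hypothetical snapshots of IP addresses. A static site never changes unless someone edits it, while a dynamic site effectively picks up these additions and removals on its own.

```python
def scope_changes(previous, current):
    """Compare two snapshots of a site's scope (collections of IP strings).

    Returns (added, removed): assets that newly appeared in the query
    results and assets that dropped out since the last snapshot.
    """
    previous, current = set(previous), set(current)
    return sorted(current - previous), sorted(previous - current)


# Hypothetical snapshots: one host retired, one new host appeared.
last_week = ["203.0.113.10", "203.0.113.11"]
this_week = ["203.0.113.11", "203.0.113.12"]
added, removed = scope_changes(last_week, this_week)
print("added:", added)
print("removed:", removed)
```

The "added" side is the valuable part for attack surface monitoring. A new public host shows up in scope without anyone having to tell the security team it exists.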

For step-by-step instructions on creating a site for Sonar assets, see InsightVM's Help Documentation.

In the end, you will have an external site whose scope updates dynamically with attack surface data found for you by Rapid7 Labs' internet-wide discovery scanning. We refer to this as Attack Surface Monitoring with Project Sonar.

Part 3: Set up site-based criticality alerts

This next part is probably the most important step, and one that most folks have never seen: creating site-based alerts for new critical vulnerabilities.

Before you create an alert based on new vulnerabilities in a site, first remediate any and all critical vulnerabilities in that “external” site. For any critical vulnerabilities you cannot remediate, I highly recommend putting a compensating control in place and excluding the vulnerability. The idea is that once this critical-only alert is enabled, it almost never triggers. We want this to be a “valuable” alert.

A valuable alert is one that doesn’t annoy the recipient so much that they auto-filter it into the trash. We want this alert to go to a user’s primary inbox, and when it triggers, to be worthy of a place there. That is why all critical vulnerabilities need to be remediated or excluded before you enable the alert.
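The sketch below shows why the cleanup-first rule keeps the alert quiet. It models the alert condition as "any critical finding that is not excluded." The `Finding` type, the vulnerability IDs, and the severity labels are hypothetical placeholders, not InsightVM's data model. Once every existing critical is remediated or excluded, the result is empty on a normal day, and anything that does appear is new and worth reading.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    asset: str
    vuln_id: str
    severity: str  # assumed labels: "critical", "severe", "moderate"


def critical_alerts(findings, exclusions):
    """Return critical findings that are not covered by an exclusion.

    exclusions: vuln IDs excluded because a compensating control is in place.
    An empty result means the alert stays silent.
    """
    return [
        f for f in findings
        if f.severity == "critical" and f.vuln_id not in exclusions
    ]


findings = [
    Finding("203.0.113.10", "vuln-a", "critical"),
    Finding("203.0.113.10", "vuln-b", "severe"),
    Finding("203.0.113.11", "vuln-c", "critical"),
]
print(critical_alerts(findings, exclusions={"vuln-c"}))  # only vuln-a still alerts
```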

Part 4: Set up automated action based on new content

This part is almost as important as the critical alert step, and it is another feature most folks have never seen.

First, click the Automated Actions icon in the left navigation pane in InsightVM.

Then, once the page is open, click the New Action button to add a new Automated Action:

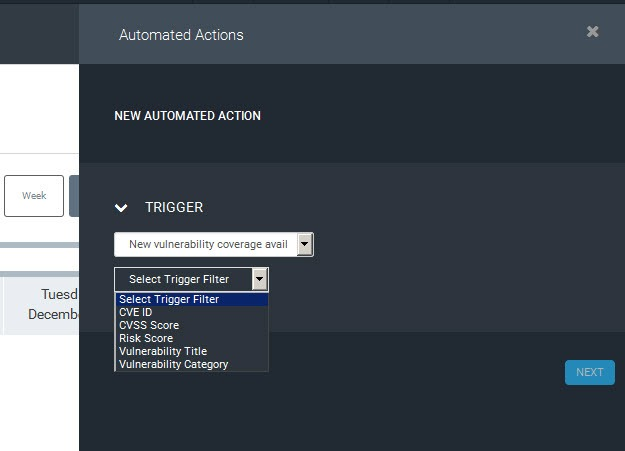

Next, click the dropdown and select “New Vulnerability Coverage Available” as the trigger. This trigger works because Rapid7 changed the way content updates work: the development team can now push out updates on any day of the week without restarting the console service. This means new content can be downloaded into your console whenever it becomes available, including coverage for new zero-days!

After selecting “New Vulnerability Coverage Available,” add a filter so that only content with a CVSS score that “is higher than” 7 qualifies. This way, the automated action only runs when the new content includes checks for critical vulnerabilities.

For the “Action” to take, select “Scan for new content,” then select your Sonar-based dynamic discovery site with the critical-only alerts set up. The scan will only kick off when new vulnerability content is downloaded to the console through a content update and that content includes critical vulnerabilities. The scan will also check only for the new content, so it will be quick and lightweight.

This automated action gives you continuous coverage for critical vulnerabilities across your attack surface. It will also trigger the high-value, critical-only alerts if one of your assets turns out to have one of those vulnerabilities, which could let you know about potential zero-days before your boss does!
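The trigger-plus-filter logic can be summarized in a few lines. This is a hypothetical sketch of the decision, not how InsightVM implements it: `NewCheck` and `checks_to_scan` are made-up names, and the threshold mirrors the “is higher than” 7 filter. An empty result means no scan runs. A non-empty result corresponds to a “Scan for new content” run against the Sonar site that covers only those new checks.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NewCheck:
    vuln_id: str
    cvss: float


def checks_to_scan(content_update, threshold=7.0):
    """Return the newly downloaded checks that pass the CVSS filter.

    Uses a strict comparison to match the "is higher than" filter,
    so a score of exactly 7.0 does not qualify.
    """
    return [c for c in content_update if c.cvss > threshold]


# Hypothetical content update with three new checks.
update = [NewCheck("check-1", 9.8), NewCheck("check-2", 5.3), NewCheck("check-3", 7.0)]
to_scan = checks_to_scan(update)
if to_scan:
    print("scan Sonar site for:", [c.vuln_id for c in to_scan])
else:
    print("no qualifying content, no scan")
```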

Part 5: Reporting (optional)

We could extend this workflow with a reporting element. While you can pick any report, I usually go with the “Top Remediation” report. Scope the report to that site, set it to run after every scan, and use the filter option to show only critical vulnerabilities. Hopefully this report is always empty, but if you receive it and it isn’t empty, it likely contains something you want to act on immediately. The report and the alert can be used interchangeably.
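The idea behind a remediation-focused report filtered to critical vulnerabilities can be sketched as "group critical findings by the fix that resolves them, then rank fixes by how many assets they clear." This is an illustrative model only. The tuple layout and remediation names are hypothetical, and it does not reproduce the actual report template's output.

```python
from collections import Counter


def top_remediations(findings, limit=10):
    """Rank fixes for critical findings by how many assets each would clear.

    findings: iterable of (asset, severity, remediation) tuples for one site.
    Returns [(remediation, asset_count), ...], most impactful first.
    An empty list is the "hopefully always empty" report.
    """
    counts = Counter()
    seen = set()
    for asset, severity, remediation in findings:
        if severity != "critical":
            continue  # the report is filtered to critical vulnerabilities only
        if (asset, remediation) in seen:
            continue  # count each asset once per remediation
        seen.add((asset, remediation))
        counts[remediation] += 1
    return counts.most_common(limit)


findings = [
    ("203.0.113.10", "critical", "Apply patch A"),
    ("203.0.113.11", "critical", "Apply patch A"),
    ("203.0.113.10", "critical", "Apply patch A"),
    ("203.0.113.11", "critical", "Disable service B"),
    ("203.0.113.12", "severe", "Update component C"),
]
print(top_remediations(findings))
```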

Conclusion

At this point, we have come full circle. When your boss asks about the latest zero-day, you will already know about the new vulnerabilities, know which assets are affected, and have kicked off remediation for any new critical vulnerabilities on your attack surface.

Hopefully, after applying some of the steps in the workflow above, you will be more effective at defending against new zero-day attacks, whether or not you know they are coming.