Authored by Stuart Millar and Ryan Wilson.

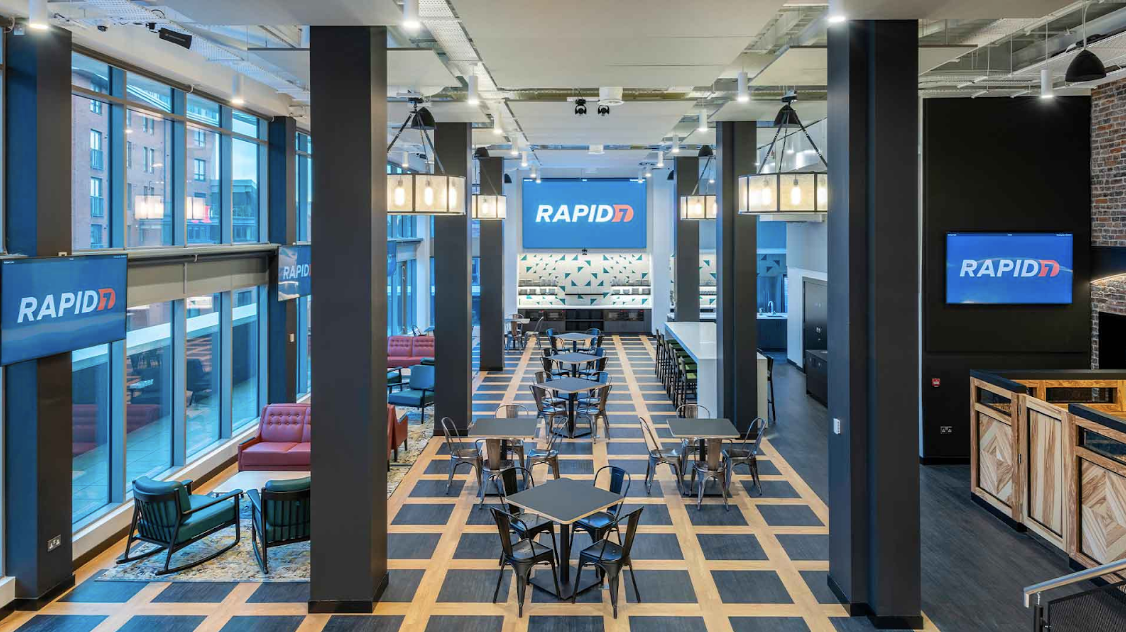

Rapid7 has expanded significantly in Belfast since establishing a presence back in 2014, resulting in the company's largest R&D hub outside the US with over 350 people spread across eight floors in our Chichester Street office. There is a wide range of product development and engineering across the entire Rapid7 platform that happens here, but nearest and dearest to our hearts is that Belfast has really become the epicentre for our more than two decades of investment in data. It has formed the bedrock for our AI, machine learning and data science efforts.

Read on to find out more about the importance of data and AI at Rapid7!

A Forward-thinking Data Attitude

First up, let’s talk data. We’ve had a specialist data presence in Belfast for a number of years, initially focused on the consumption, distribution, and analysis of high-quality product usage data, via interfaces such as Amazon SNS/SQS, piping data into time-series data stores like TimescaleDB and InfluxDB. Product usage data stands out for its high volume and cardinality, which these data stores are optimized for. As data at Rapid7 evolved, we needed more scale, so we’ve been introducing more scalable technologies such as Apache Kafka, Spark, and Iceberg. This stack will enable multiple entry points for access to our data.

- Apache Kafka, the heart of our data infrastructure, is a distributed streaming platform allowing us to handle real-time data streams with ease. Kafka acts as a reliable and scalable pipeline, ingesting massive amounts of data from various sources in real-time. Its pub-sub architecture ensures data is efficiently distributed across the system, with multiple types of consumers per topic enabling teams to process and analyze information as it flows.

- Apache Spark is our processing engine, running the transforms that take our data to the next level. It lets us choose between batch processing and streaming directly from Kafka, and in both cases we ultimately land the data in an object store with the Iceberg layer on top.

- Apache Iceberg is an open table format for large-scale datasets, providing ACID transactions, schema evolution, and time travel capabilities. These features are instrumental in maintaining consistency and reliability of our data, which is crucial for AI and analytics at Rapid7. Additionally, the ability to perform time travel queries with Iceberg allows us to analyze and curate historical data, an essential component in building predictive AI models.
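To make the time travel idea concrete, here is a minimal toy sketch in plain Python. It is not the Iceberg API and not how our pipelines are written. The `ToySnapshotTable` class, its methods and the example rows are all hypothetical, and they only mimic the core idea that every commit produces an immutable snapshot that can still be queried later.

```python
from dataclasses import dataclass, field


@dataclass
class ToySnapshotTable:
    """Toy append-only table that keeps one immutable snapshot per commit.

    Hypothetical illustration only: it mimics the snapshot idea behind
    time travel queries and does not use or reproduce the Iceberg API.
    """

    _snapshots: list = field(default_factory=list)

    def commit(self, new_rows):
        """Append rows as a single commit and return the new snapshot id."""
        previous = self._snapshots[-1] if self._snapshots else ()
        # Tuples are immutable, so older snapshots can never be altered.
        self._snapshots.append(previous + tuple(new_rows))
        return len(self._snapshots) - 1

    def read(self, snapshot_id=None):
        """Read the latest snapshot, or an older one for a time travel query."""
        if not self._snapshots:
            return []
        if snapshot_id is None:
            snapshot_id = len(self._snapshots) - 1
        return list(self._snapshots[snapshot_id])


table = ToySnapshotTable()
first = table.commit([{"event": "login", "count": 3}])
second = table.commit([{"event": "scan", "count": 7}])

latest_rows = table.read()                        # both commits are visible
rows_as_of_first = table.read(snapshot_id=first)  # only the first commit
print(len(latest_rows), len(rows_as_of_first))
```

Reading an older snapshot is what makes it possible to rebuild a training set exactly as the data looked at a given point, which is why time travel matters for curating historical data.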

Our engineers are constantly developing applications on this stack to facilitate ETL pipelines in languages such as Python, Java and Scala, running within K8s clusters. In keeping with our forward-thinking attitude to data, we continue to adopt new tooling and governance to facilitate growth, such as the introduction of a Data Catalog to visualize lineage with a searchable interface for metadata. In this way, users can discover data for themselves and also learn how specific datasets are related, giving a clearer understanding of usage. All this empowers data and AI engineers to discover and ingest the vast pools of data available at Rapid7.

Our Data-Centric Approach to AI

At the core of our AI engineering is a data-centric approach, in line with the recent gradual trend away from model-centric approaches. We find model design isn’t always the differentiator: more often it’s all about the data. In our experience, when we compare different models for a classification task, for example a set of neural networks plus some conventional classifiers, they may all perform fairly similarly given high-quality data. With data front and centre at Rapid7 as a key part of our overall strategy, major opportunities exist for us to leverage high-quality, high-volume datasets in our AI solutions, leading to better results.
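As a rough illustration of that point, the toy sketch below compares two very simple classifiers on a tiny, hand-built one-dimensional dataset, first with clean labels and then with two training labels deliberately flipped. Everything here is an assumption made for illustration: the data, the two models and the function names are hypothetical and are not the models or datasets we use in practice.

```python
def nearest_centroid(train, x):
    """Predict the class whose mean feature value is closest to x."""
    sums, counts = {}, {}
    for value, label in train:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return min(sums, key=lambda label: abs(x - sums[label] / counts[label]))


def nearest_neighbour(train, x):
    """Predict the label of the single closest training example."""
    return min(train, key=lambda pair: abs(x - pair[0]))[1]


def accuracy(model, train, test):
    """Fraction of test points the model labels correctly."""
    correct = sum(model(train, x) == y for x, y in test)
    return correct / len(test)


# Hypothetical (feature, label) pairs: low values are class 0, high are class 1.
clean_train = [(1, 0), (2, 0), (3, 0), (7, 1), (8, 1), (9, 1)]
# Same features, but the labels for 3 and 7 have been flipped (label noise).
noisy_train = [(1, 0), (2, 0), (3, 1), (7, 0), (8, 1), (9, 1)]
test = [(0.6, 0), (2.2, 0), (3.4, 0), (6.6, 1), (7.2, 1), (9.4, 1)]

models = {"nearest_centroid": nearest_centroid,
          "nearest_neighbour": nearest_neighbour}
results = {
    name: {"clean": accuracy(model, clean_train, test),
           "noisy": accuracy(model, noisy_train, test)}
    for name, model in models.items()
}
for name, scores in results.items():
    print(name, scores)
```

With clean labels both toy models score identically on this data. The gap between them only opens up once the labels are corrupted, which is the kind of behaviour that makes data quality the first thing worth checking.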

Of course, there are always cases where model design is more influential. However, marginal performance gains, often seen in novelty-focused academia, may not be worth the extra implementation effort or compute expense in practice. That’s not to say we aren’t across new models and architectures - we review them every week - though the classic saying “avoid unnecessary complexity” also applies to AI.

The Growing Importance of Data in GenAI

Let’s take GenAI LLMs as an example. Anyone following developments may have noticed training data is getting more of the spotlight, again indicative of the data-centric approach. LLMs, based on transformer architectures, have been getting bigger, and vendors are PRing the latest models hard. Look closely, though, and they tend to be trained with different datasets, often in combination with public benchmark data too. However, if two or three LLMs from different vendors were trained with exactly the same data for long enough, we’re willing to bet the performance differences between them might be minimal. Further, we’re seeing researchers push in the other direction, trying to create smaller, less complex open-source LLMs that compare favourably to their much larger commercial counterparts.

Considering this data-centric shift, more complex models in general may not drive performance as much as you think. The same principles apply to more conventional analytics, machine learning and data science. Some models we’ve trained use very small datasets, maybe only 100-200 examples, yet generalise well because the data is accurately labelled and representative of the wild. Huge datasets are at risk of containing duplicates or mislabelled examples, which amount to significant noise, so it’s about data quality as well as quantity. Thankfully we have both in abundance, and our data is of a scale you’re unlikely to find elsewhere.
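A simple way to picture that kind of noise is a quick audit for duplicates and conflicting labels. The sketch below is a minimal, standard-library-only illustration. The `audit_labels` and `normalise` helpers and the example rows are hypothetical, and a real pipeline would need a much more careful definition of what counts as a duplicate.

```python
from collections import defaultdict


def normalise(text):
    """Lower-case and collapse whitespace so trivially different copies match."""
    return " ".join(text.lower().split())


def audit_labels(examples):
    """Return (deduplicated rows, duplicate count, texts with conflicting labels)."""
    labels_by_text = defaultdict(set)
    for text, label in examples:
        labels_by_text[normalise(text)].add(label)

    # The same text carrying more than one label is treated as mislabelled.
    conflicts = {t for t, labels in labels_by_text.items() if len(labels) > 1}

    deduplicated, seen, duplicates = [], set(), 0
    for text, label in examples:
        key = normalise(text)
        if key in conflicts:
            continue  # set aside for manual review rather than guessing
        if key in seen:
            duplicates += 1
            continue
        seen.add(key)
        deduplicated.append((key, label))
    return deduplicated, duplicates, sorted(conflicts)


# Hypothetical labelled examples, invented purely for illustration.
examples = [
    ("Failed login from new device", "suspicious"),
    ("failed login  from new device ", "suspicious"),  # duplicate
    ("Scheduled backup completed", "benign"),
    ("Password reset requested", "benign"),
    ("password reset requested", "suspicious"),        # conflicting label
]

clean_rows, duplicate_count, conflicting = audit_labels(examples)
print(clean_rows, duplicate_count, conflicting)
```

Conflicting examples are dropped from the clean set rather than resolved automatically, on the assumption that a person should decide which label is right.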

Expanding our AI Centre of Excellence in Belfast

Rapid7 is further investing in Belfast as part of our new AI Centre of Excellence, encompassing the full range of AI, ML and data science. We’re on a mission to use data and AI to accelerate the threat investigation, detection and response (D&R) capabilities of our Security Operations Centre (SOC). The AI CoE partners with our data and D&R teams in enabling customers to assess risk, detect threats and automate their security programs. It ensures AI, ML and data science are applied meaningfully to add customer value, best achieve business objectives and deliver ROI. We avoid unnecessary complexity, taking a creative, fast-fail, highly iterative approach to move ideas quickly from proof-of-concept to a go or no-go decision.

The group's make-up is such that our technical skills complement one another; we share our AI, ML and data science knowledge while also contributing on occasion to new external AI policy initiatives with recognised bodies like NIST. We use, for example, a mix of LLMs, sklearn, PyTorch and more, whilst the team has a track record of publishing award-winning research at the likes of AISec at ACM CCS and with IEEE. All of this is powered by data, and the AI CoE’s goals are ambitious.

Interested in joining us?

We are always interested in hearing from people with a desire to be part of something big. If you want a career move where you can grow and make an impact with data and AI, this is it. We’re currently recruiting for multiple brand-new data and AI roles in Belfast across various levels of seniority as we expand both teams - and we’d love to hear from you! Please check out the roles over here!